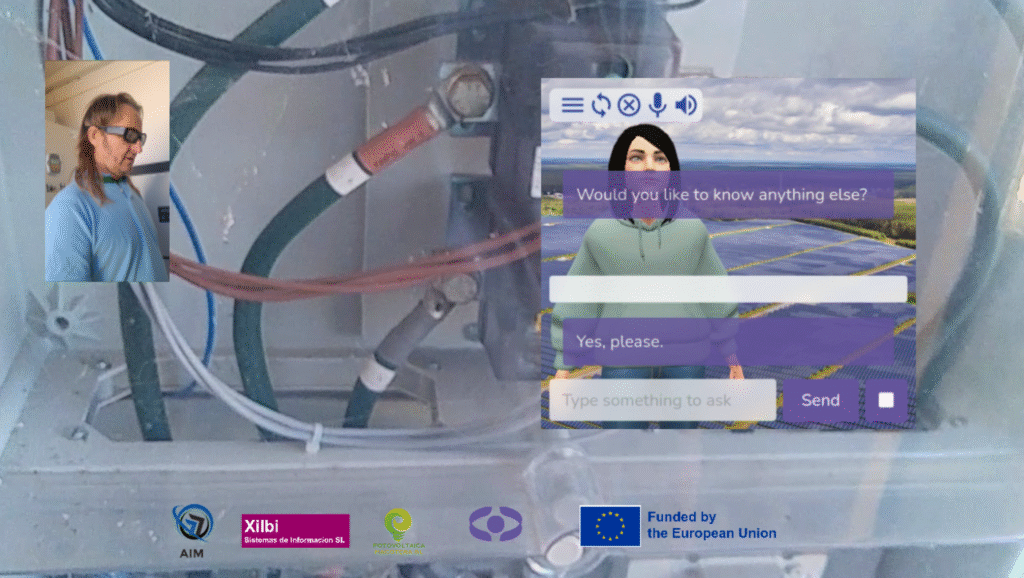

In this final interview, the AIM team details their triumphant Open Call 2 pilot, which successfully overcame the persistent challenge of maintaining robust Augmented Reality (AR) anchoring in remote areas. Discover the innovative approach and key findings that made this complex technical achievement possible.

1. Now that we have reached the end of your pilots, what was the single biggest challenge you faced, and what was the most rewarding aspect of the experience?

The biggest challenge was keeping AR anchoring rock solid across very different environments while connectivity varied. We solved it with RTK positioning, tight IMU fusion, and an offline-first path that cached maps and Copernicus layers. The most rewarding part was seeing crews trust it in real work. Using the SERMAS toolkit helped here because its modular XR pipeline and sample scenes let us iterate fast on tracking and UI until the overlays stayed exactly where technicians expected.

2. Looking back at the milestones we discussed, which were successfully met, and which fell short? For those not fully achieved, what were the primary obstacles encountered? Are you happy with the final results?

We met the planned milestones on time, from development completion through the field demos and final validation. The few shortfalls were practical: bright sun forced a high-contrast theme, early voice commands needed trimming, and long sessions needed a power bank. I am happy with the outcome. SERMAS helped de-risk the schedule by giving us a baseline speech stack.

3. To what extent do you feel the pilot results aligned with your initial expectations?

They aligned closely with what we aimed for in the technical proposal. We set targets for sub-4 cm positioning using RTK with OSNMA, at least a 25 percent improvement in asset location versus legacy methods, and strong user acceptance. The pilot met or exceeded those marks in real operations at the solar plant and in an urban street trial, so the delivered capability matches the plan rather than being a lab-only result.
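As a rough illustration of how targets like these can be checked against field measurements, here is a minimal sketch. The two thresholds come from the answer above. Everything else is an assumption made for illustration and is not the AIM team's actual tooling: the names `KpiTargets`, `improvement_pct`, and `meets_targets`, and the choice to treat asset location as a lower-is-better metric (for example, time to locate an asset).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KpiTargets:
    # Thresholds quoted in the interview; the field names are hypothetical.
    max_position_error_cm: float = 4.0  # "sub-4 cm positioning"
    min_improvement_pct: float = 25.0   # "at least a 25 percent improvement"


def improvement_pct(legacy: float, new: float) -> float:
    """Relative improvement of `new` over `legacy` for a lower-is-better metric."""
    if legacy <= 0:
        raise ValueError("legacy value must be positive")
    return (legacy - new) / legacy * 100.0


def meets_targets(
    position_error_cm: float,
    legacy_metric: float,
    new_metric: float,
    targets: KpiTargets = KpiTargets(),
) -> bool:
    """True when positioning is strictly below the error limit and the
    improvement over the legacy method reaches the minimum percentage."""
    accurate = position_error_cm < targets.max_position_error_cm
    improved = improvement_pct(legacy_metric, new_metric) >= targets.min_improvement_pct
    return accurate and improved
```

Any numbers passed to `meets_targets` would come from a team's own field logs. The interview does not report the underlying measurements.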

4. What are the immediate next steps and long-term ones for your pilots?

Short term, we’re operationalising at the pilot sites: deployment playbooks, refresher training, Spanish and Portuguese voice packs, and a hardened offline mode so the core functions keep working without cloud links. We’re also packaging the WebView-based client so it remains hardware-agnostic. Long term, we’ll scale to more facilities and adjacent utilities, add remote expert assistance and in-field asset annotation, and fold the solution into a broader digital-twin stack that blends Copernicus layers, IoT, and work orders. A living-lab in Portugal is on our roadmap to fast-track demos, partner certification, and repeatable rollouts.

5. Thinking about the whole process of implementing your solution, what is the ‘best practice’ you would suggest to others who want to use the SERMAS toolkit to implement their VR solutions?

Design field-first and offline-first, then measure everything. Co-design with technicians, lock your KPIs early, and make sure the system degrades gracefully: cache your Copernicus tiles and models, run a small local server for bad-coverage days, keep a reduced command set that works without the network, and avoid device lock-in by wrapping SERMAS in a standard Android WebView. That combination is what turned a neat demo into a dependable tool in the field.
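The graceful-degradation idea above (serve cached tiles first, then a local server, then the cloud) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the team's implementation and not a SERMAS API: `TileStore`, the `(zoom, x, y)` tile key, and the fetcher callables are all hypothetical names.

```python
from typing import Callable, Dict, Iterable, List, Optional, Tuple

TileKey = Tuple[int, int, int]        # hypothetical (zoom, x, y) key
Fetcher = Callable[[TileKey], bytes]  # raises OSError when unreachable


class TileStore:
    """Cache-first lookup that falls back through an ordered list of sources."""

    def __init__(self, sources: Iterable[Tuple[str, Fetcher]]) -> None:
        self._cache: Dict[TileKey, bytes] = {}
        self._sources: List[Tuple[str, Fetcher]] = list(sources)

    def get(self, key: TileKey) -> Tuple[Optional[bytes], str]:
        """Return (data, origin); data is None if every source fails."""
        if key in self._cache:
            return self._cache[key], "cache"
        for name, fetch in self._sources:
            try:
                data = fetch(key)
            except OSError:  # includes ConnectionError and TimeoutError
                continue     # degrade to the next source instead of failing
            self._cache[key] = data  # keep it for the next offline request
            return data, name
        return None, "unavailable"
```

In use, the sources would be listed in order of preference, for example `TileStore([("local", fetch_from_local_server), ("cloud", fetch_from_cloud)])`, where both fetchers are placeholders for whatever transport a deployment actually uses. Returning the origin alongside the data lets the UI show technicians when they are looking at cached content.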