SERMAS Toolkit

What is it and what is expected?

What is it?

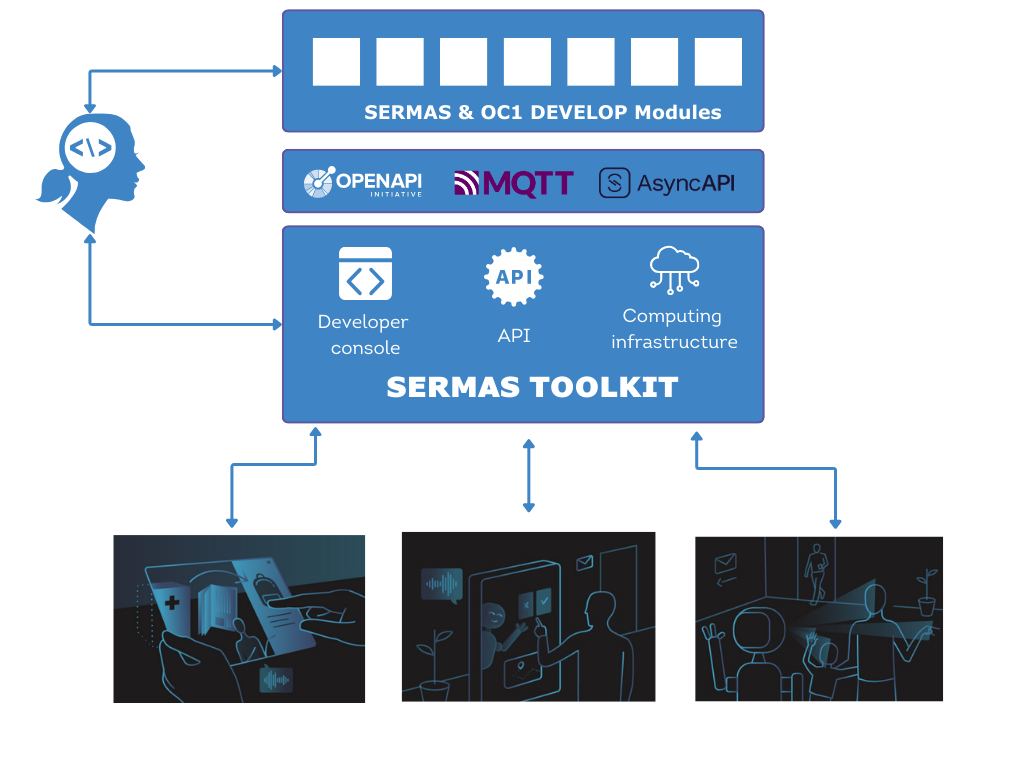

The toolkit is at the core of the SERMAS modular architecture and offers a set of features developers can use when creating new applications. The toolkit setup includes the allocation of resources like computing, GPUs, storage, and database systems.

XR developers can log in to the Toolkit Developer Console, create an agent, select modules (e.g. integrated dialogue capabilities, detection mechanisms for people and objects), and customise the agent for use in different contexts.

What is expected?

How will the SERMAS Toolkit provide the integration point for the SERMAS modules?

The Toolkit modules

Detection

The Detection module supports perception of the world through different sensors, prioritizing on-edge processing to limit the transmission and potential diffusion of user data. This approach moves computation close to the sensor, leveraging dedicated AI algorithms adapted to run at the edge of the architecture.

This module is in charge of detecting:

• a user’s intention to interact with the agent

• users interacting with the agent, and their pose, position, movements and emotions

• groups of users interacting with the agent

• relevant features in the surrounding environment, such as objects the user needs to interact with.

Dialogue

The Dialogue module enables natural language interaction with the SERMAS XR Agent. It offers interfaces to speech-to-text, text-to-speech and large language models (LLMs) to interact with the user. Text-based interaction is also available, for example via chat-like interfaces using a touch screen or keyboard. Speech generation integrates with Speech Synthesis Markup Language (SSML) features to express emotion by customizing the synthetic speech. Multilanguage support is enabled by direct user selection or, with lower reliability, by spoken language detection. The LLM uses English as a pivot language: user input is translated to English before processing, and the response is translated back to the language of the request.
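The English-as-middle-language flow described above can be sketched as follows. This is a minimal illustration, not the Toolkit's implementation: `answer_via_english`, `fake_translate` and `fake_generate` are hypothetical names, the translation and LLM services are replaced by toy functions, and skipping translation for English-speaking users is an assumption.

```python
from typing import Callable

# Hypothetical stand-ins for the real translation and LLM services.
Translate = Callable[[str, str, str], str]  # (text, source_lang, target_lang) -> text
Generate = Callable[[str], str]             # English prompt -> English reply


def answer_via_english(text: str, user_lang: str,
                       translate: Translate, generate: Generate) -> str:
    """Translate the input to English, query the LLM, translate the reply back."""
    pivot = "en"
    # Assumption: no translation is needed when the user already uses English.
    prompt = text if user_lang == pivot else translate(text, user_lang, pivot)
    reply = generate(prompt)
    return reply if user_lang == pivot else translate(reply, pivot, user_lang)


# Toy implementations so the sketch runs without external services.
def fake_translate(text: str, source: str, target: str) -> str:
    return f"[{source}->{target}] {text}"


def fake_generate(prompt: str) -> str:
    return f"echo: {prompt}"


print(answer_via_english("ciao", "it", fake_translate, fake_generate))
```

Passing the translation and generation steps as functions keeps the pipeline independent of any particular provider, which suits a modular setup where those services can be swapped.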

Robotics

Security

The Security module handles user authentication and authorizes interactions between modules. It relies on Keycloak, an open-source identity and access management solution that offers an OAuth 2.0 and OpenID Connect compatible implementation and integrated user management. The access-control list (ACL) model is based on resource/scope definitions mapped to permissions, which determine whether a Client with specific assigned permissions is allowed to access protected resources in a module.
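To make the resource/scope model concrete, the check can be pictured roughly as below. This is a simplified sketch under stated assumptions: in the Toolkit the evaluation is delegated to Keycloak, and the `Permission`, `Client` and `is_allowed` names, as well as the example resource and scope values, are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Permission:
    resource: str  # a protected resource in a module (hypothetical: "dialogue")
    scope: str     # an action on that resource (hypothetical: "speech")


@dataclass
class Client:
    client_id: str
    permissions: set[Permission] = field(default_factory=set)


def is_allowed(client: Client, resource: str, scope: str) -> bool:
    """Return True only if the client holds the exact resource/scope permission."""
    return Permission(resource, scope) in client.permissions


# A hypothetical kiosk client that may only use the dialogue speech feature.
kiosk = Client("kiosk", {Permission("dialogue", "speech")})
print(is_allowed(kiosk, "dialogue", "speech"))  # True
print(is_allowed(kiosk, "platform", "admin"))   # False
```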

Session

The Session module tracks the interaction of a user with an instance of the Agent. The Agent may be a digital instance of the avatar, the kiosk interface or a robot. The Session module tracks events from the different active agents, monitoring the state of the connected systems that are part of the same application.

UI

The UI module offers points of interaction to enable integrated multimodal UI features. It supports audio/video and text-only interfaces, giving agent instances a flexible way to compose UIs. Agents and application clients emit the events that trigger content rendering, and an agent implementing a rendering strategy presents information based on the event it receives. The avatar, for example, supports rendering strategies for video, images, text, links and webpages.
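The event-driven rendering described above could be approximated by a lookup from content type to rendering strategy. The sketch below is illustrative only: the event shape (`type` and `content` keys), the `RENDERERS` table and the HTML-like output strings are assumptions, not the Toolkit's actual event schema.

```python
from typing import Callable

# Hypothetical event shape: {"type": <content type>, "content": <payload>}.
Renderer = Callable[[dict], str]

# One rendering strategy per content type the agent supports.
RENDERERS: dict[str, Renderer] = {
    "text": lambda event: event["content"],
    "image": lambda event: f"<img src='{event['content']}'>",
    "link": lambda event: f"<a href='{event['content']}'>{event['content']}</a>",
}


def render(event: dict) -> str:
    """Pick the strategy matching the event type and apply it to the event."""
    renderer = RENDERERS.get(event.get("type"))
    if renderer is None:
        raise ValueError(f"no rendering strategy for {event.get('type')!r}")
    return renderer(event)


print(render({"type": "text", "content": "Welcome!"}))
```

Keeping the strategies in a table means a new content type can be supported by registering one more entry, without touching the dispatch logic.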

XR

The XR module enables immersive interactions that are well connected to the state of the overall system, such as applications, user sessions and agent states. This module allows switching to an AR-based interaction model and leveraging the device’s natively supported features to expand the interaction and context awareness of the agents.

Platform

The Platform module offers supporting functionalities to the other modules and provides management APIs to handle application configurations and generic maintenance capability for the overall system. The core feature of the Platform module is to support the Application concept, which enables a user to create an avatar, register a new module (defining new resources and scopes) and create clients with specific permissions to be used in the agent’s implementation.

Ultimately, this module collects the features required by the Toolkit to enable the modular architecture and provides the functional components required to connect the Toolkit modules.

How did we use the toolkit in our open calls?

Where to find information about the SERMAS Toolkit?

Documentation website

SERMAS on GitHub