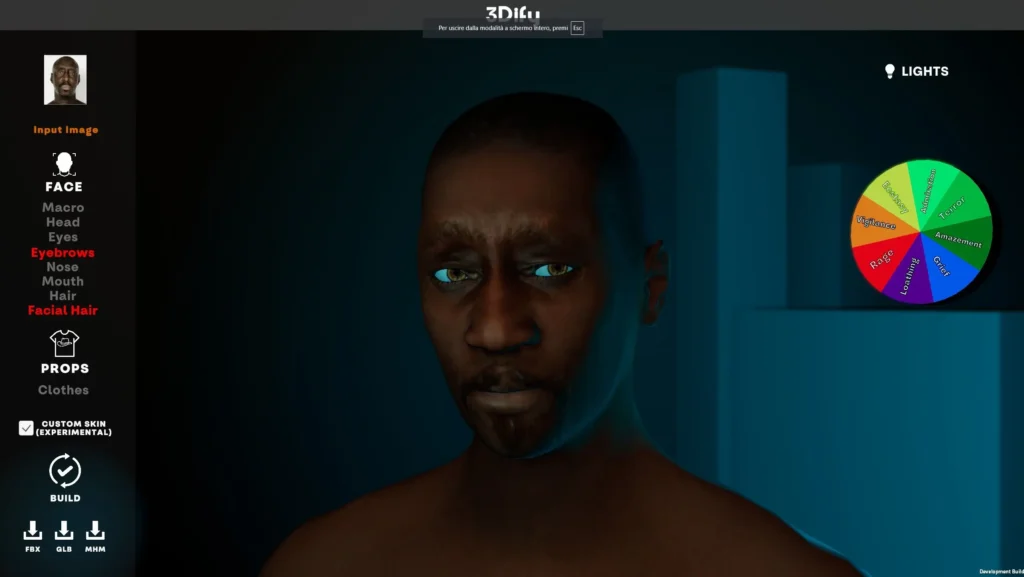

DW Innovation Highlights 3Dify & 3DforXR: Smart 3D for Media & Journalism

Interested in digital media, journalism, or content creation? Our partner Alexander Plaum from DW Innovation has written an article showcasing two of our sub-projects, 3Dify and 3DforXR. Both are designed for the smart creation of XR-ready 3D models and are free for anyone to use.

Celebrating Women in Extended Reality

Seven women from different EU-funded projects joined us for a special webinar.

An EU Project in light of the EU AI Act – The SERMAS Toolkit: a compliant High-risk AI System

What are the implications of the EU Artificial Intelligence (AI) Act for a project like SERMAS?

“Hey, we’ve been trying to meet you”

Our OC2-DEMONSTRATE open call is running, and we are looking for you. Apply by 26 June 2024.

Unmasking the Unseen: Socio-Technical Security in XR Systems with Unwitting User Adversaries

Security experts typically view XR systems as technical constructs built on software processes, digital protocols, and cryptographic algorithms. Dive into our article on XR system security.

“Five XR projects enter in a conference”…

Step into the world of XR with our joint interview featuring XR experts from 5 EU-funded projects!

SERMAS explores the world of XR Innovation at Immersive Tech Week 2023

The SERMAS team was at the heart of innovation at the Immersive Tech Week 2023 in Rotterdam.

The world of avatars is becoming more visible and intriguing by the hour

Enter the world of avatars, AI, and metaverse platforms with the DW Innovation team.

Meet the partners: DW Innovation

To help you get to know the SERMAS team better, we have created a series of articles presenting the main people running the project. The team from DW shared their thoughts on the project's main results and objectives.

Meet the partners: SUPSI

To help you get to know the SERMAS team better, we have created a series of articles presenting the main people running the project. The team from SUPSI shared their thoughts on the project's main results and objectives.